Google introduced its in-house machine learning chips at last year’s I/O. Known as Tensor Processing Units, or TPUs for short, these chips were later reported to be around 15 to 30 times faster than traditional CPUs and GPUs.

TPUs are designed to accelerate machine learning processing on Google Cloud Platform and reduce the time required to train and run TensorFlow-based AI models. Google is already using TPUs in their data centers.

Now, as part of a limited beta program, Google has made the second generation of the chips available for public use under the name Cloud TPU. Using them doesn’t come cheap, though: the trial is open to anyone who can spend $6.50 per Cloud TPU per hour. To start with, Google is providing a variety of open source reference ML models for Cloud TPU, covering tasks such as image classification and object detection.

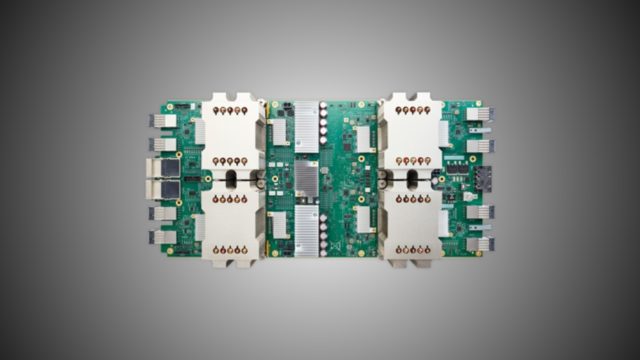

Each TPU board packs four custom ASICs and offers floating-point performance of up to 180 teraflops, along with 64 GB of memory. Google says multiple TPU boards can be combined over a dedicated network to form supercomputers, which the company calls TPU Pods. During tests, Google used a TPU Pod (with 64 TPUs) to train the ResNet-50 model in less than 30 minutes, down from 23 hours when using a single TPU.
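To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above (23 hours on one TPU, under 30 minutes on 64 TPUs, $6.50 per Cloud TPU per hour). The function names are made up for illustration, the 30-minute figure is treated as an upper bound, and applying the single-TPU beta price to every TPU in a Pod is an assumption, since Pod pricing isn't stated here.

```python
# Back-of-the-envelope numbers from the article; not official Google figures.
PRICE_PER_TPU_HOUR = 6.50  # USD, beta price quoted for a single Cloud TPU


def speedup(baseline_hours, parallel_hours):
    """How many times faster the parallel run is than the baseline."""
    return baseline_hours / parallel_hours


def scaling_efficiency(baseline_hours, parallel_hours, n_devices):
    """Fraction of ideal linear scaling achieved (1.0 means perfect)."""
    return speedup(baseline_hours, parallel_hours) / n_devices


def run_cost(hours, n_devices=1, price=PRICE_PER_TPU_HOUR):
    """Cost in USD, ASSUMING every device is billed at the same hourly rate."""
    return hours * n_devices * price


single_tpu_hours = 23.0  # ResNet-50 on one TPU
pod_hours = 0.5          # "less than 30 minutes", used as an upper bound
pod_tpus = 64

print(f"Speedup: at least {speedup(single_tpu_hours, pod_hours):.0f}x")
print(f"Scaling efficiency: at least "
      f"{scaling_efficiency(single_tpu_hours, pod_hours, pod_tpus):.0%}")
print(f"Single-TPU run: ${run_cost(single_tpu_hours):.2f}")
print(f"64-TPU run (assumed pricing): up to ${run_cost(pod_hours, pod_tpus):.2f}")
```

Under these assumptions the Pod is at least 46 times faster than a single TPU, which is roughly 72% of perfect linear scaling across 64 devices. The single-TPU run works out to about $150 at the beta price.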